An embedded system is a special-purpose computer system designed to perform one or a few

dedicated functions, sometimes with real-time computing constraints. It is usually embedded as

part of a complete device including hardware and mechanical parts. In contrast, a

general-purpose computer, such as a personal computer, can do many different tasks depending

on programming. Since the embedded system is dedicated to specific tasks, design engineers

can optimize it, reducing the size and cost of the product, or increasing the reliability and

performance. Some embedded systems are mass-produced, benefiting from economies of scale.

Physically, embedded systems range from portable devices such as digital watches and MP3

players, to large stationary installations like traffic lights, factory controllers, or the systems

controlling nuclear power plants. Complexity varies from low, with a single microcontroller chip, to

very high with multiple units, peripherals and networks mounted inside a large chassis or

enclosure.

In general, "embedded system" is not an exactly defined term, as many systems have some

element of programmability. For example, handheld computers share some elements with

embedded systems - such as the operating systems and microprocessors which power them - but

are not truly embedded systems, because they allow different applications to be loaded and

peripherals to be connected.

Embedded systems span all aspects of modern life, and examples of their use are

numerous. Telecommunications systems employ numerous embedded systems from telephone

switches for the network to mobile phones at the end-user. Computer networking uses dedicated

routers and network bridges to route data. Consumer electronics include personal digital

assistants (PDAs), MP3 players, mobile phones, videogame consoles, digital cameras, DVD

players, GPS receivers, and printers. More and more household appliances, such as microwave

ovens and washing machines, include embedded systems to add advanced functionality.

Advanced HVAC systems use networked thermostats to more accurately and efficiently control

temperature that can change by time of day and season. Home automation uses wired- and

wireless-networking that can be used to control lights, climate, security, audio/visual, etc., all of

which use embedded devices for sensing and controlling.

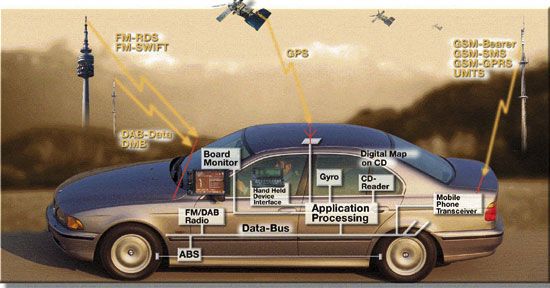

Transportation systems from flight to automobiles are also increasingly using embedded systems.

New airplanes contain advanced avionics such as inertial guidance systems and GPS receivers

that also have considerable safety requirements. Various electric motors — brushless DC motors,

induction motors and DC motors — are using electric/electronic motor controllers. Automobiles,

electric vehicles, and hybrid vehicles are increasingly using embedded systems to maximize

efficiency and reduce pollution. Other automotive safety systems include anti-lock braking

systems (ABS), Electronic Stability Control (ESC/ESP), and automatic four-wheel drive. Medical

equipment is continuing to advance with more embedded systems for vital signs monitoring,

electronic stethoscopes for amplifying sounds, and various medical imaging (PET, SPECT, CT,

MRI) for non-invasive internal inspections.

In the earliest years of computers in the 1940s, computers were sometimes dedicated to a single

task, but were too large to be considered "embedded". Over time however, the concept of

programmable controllers developed from a mix of computer technology, solid state devices, and

traditional electromechanical sequences. The first recognizably modern embedded system was the

Apollo Guidance Computer, developed by Charles Stark Draper at the MIT Instrumentation

Laboratory. At the project's inception, the Apollo guidance computer was considered the riskiest

item in the Apollo project. The use of the then new monolithic integrated circuits, to reduce the

size and weight, increased this risk.

The first mass-produced embedded system was the Autonetics D-17 guidance computer for the

Minuteman missile, released in 1961. It was built from transistor logic and had a hard disk for main

memory. When the Minuteman II went into production in 1966, the D-17 was replaced with a new

computer that was the first high-volume use of integrated circuits. This program alone reduced

prices on quad NAND gate ICs from $1000 each to $3 each, permitting their use in commercial

products.

Since these early applications in the 1960s, embedded systems have come down in price. There

has also been an enormous rise in processing power and functionality. For example, the first

microprocessor was the Intel 4004, which found its way into calculators and other small systems,

but required external memory and support chips. In 1978, the National Electrical Manufacturers

Association released a standard for programmable microcontrollers. The definition covered

almost any computer-based controller, including single-board computers, numerical

controllers, and sequential controllers that perform event-based instructions.

By the mid-1980s, many of the previously external system components had been integrated into

the same chip as the processor, resulting in integrated circuits called microcontrollers, and

widespread use of embedded systems became feasible. As the cost of a microcontroller fell below

$1, it became feasible to replace expensive knob-based analog components such as

potentiometers and variable capacitors with digital electronics controlled by a small

microcontroller with up/down buttons or knobs. By the end of the 1980s, embedded systems were

the norm rather than the exception for almost all electronic devices, a trend which has continued

since.

Embedded systems are designed to do some specific task, rather than be a general-purpose

computer for multiple tasks. Some also have real-time performance constraints that must be met,

for reasons such as safety and usability; others may have low or no performance requirements,

allowing the system hardware to be simplified to reduce costs. Embedded systems are not always

separate devices. Most often they are physically built into the devices they control. The software

written for embedded systems is often called firmware, and is stored in read-only memory or Flash

memory chips rather than a disk drive. It often runs with limited computer hardware resources:

a small or absent keyboard and screen, and little memory.

Embedded systems range from no user interface at all - dedicated only to one task - to full user

interfaces similar to desktop operating systems in devices such as PDAs. Simple embedded

devices use buttons, LEDs, and small character- or digit-only displays, often with a simple menu

system.

Embedded processors can be broken into two distinct categories: microprocessors (µP) and

microcontrollers (µC). Microcontrollers have built-in peripherals on the chip, reducing the size of the

system. There are many different CPU architectures used in embedded designs, such as ARM,

MIPS, Coldfire/68k, PowerPC, x86, PIC, 8051, Atmel AVR, Renesas H8, SH, V850, FR-V, M32R, Z80,

Z8, etc. This is in contrast to the desktop computer market, which is currently limited to just a few

competing architectures. PC/104 and PC/104+ are a typical base for small, low-volume embedded

and ruggedized system design. These often use DOS, Linux, NetBSD, or an embedded real-time

operating system such as MicroC/OS-II, QNX or VxWorks.

A common configuration for very-high-volume embedded systems is the system on a chip (SoC),

an application-specific integrated circuit (ASIC), for which the CPU core was purchased and added

as part of the chip design. A related scheme is to use a field-programmable gate array (FPGA),

and program it with all the logic, including the CPU.

Embedded systems talk with the outside world via peripherals, such as:

- Serial Communication Interfaces (SCI): RS-232, RS-422, RS-485, etc.
- Synchronous Serial Communication Interfaces: I2C, JTAG, SPI, SSC, and ESSI
- Universal Serial Bus (USB)
- Networks: Ethernet, Controller Area Network, LonWorks, etc.
- Timers: PLL(s), Capture/Compare, and Time Processing Units
- Discrete I/O: General Purpose Input/Output (GPIO)
- Analog to Digital/Digital to Analog converters (ADC/DAC)

As with other software, embedded system designers use compilers, assemblers, and debuggers to develop embedded system software. However, they may also use some more specific tools:

- In-circuit debuggers or emulators (described below).
- Utilities to add a checksum or CRC to a program, so the embedded system can check whether the program is valid.
- For systems using digital signal processing, a math workbench such as MATLAB, Simulink, MathCad, or Mathematica to simulate the mathematics. Developers might also use libraries for both the host and target, which eliminates developing DSP routines, as done in DSPnano RTOS and Unison Operating System.
- Custom compilers and linkers, which may be used to improve optimisation for the particular hardware.
- A special language or design tool of the embedded system's own, or enhancements to an existing language such as Forth or Basic.
- A real-time operating system or embedded operating system, which may have DSP capabilities like DSPnano RTOS.
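
The checksum or CRC utility mentioned above can be illustrated with a minimal sketch. It assumes a hypothetical image layout in which the program payload is followed by a 4-byte little-endian CRC-32 (the common reflected polynomial 0xEDB88320); the function names `crc32_compute`, `image_append_crc`, and `image_is_valid` are invented for this example and do not come from any particular toolchain.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Bitwise CRC-32 using the reflected polynomial 0xEDB88320. */
uint32_t crc32_compute(const uint8_t *data, size_t len)
{
    uint32_t crc = 0xFFFFFFFFu;
    for (size_t i = 0; i < len; i++) {
        crc ^= data[i];
        for (int bit = 0; bit < 8; bit++)
            crc = (crc & 1u) ? (crc >> 1) ^ 0xEDB88320u : (crc >> 1);
    }
    return ~crc;
}

/* Build-time side: store the CRC of the payload in the 4 bytes after it.
 * The buffer must have room for payload_len + 4 bytes (assumed layout). */
void image_append_crc(uint8_t *image, size_t payload_len)
{
    assert(image != NULL);
    uint32_t crc = crc32_compute(image, payload_len);
    for (int i = 0; i < 4; i++)
        image[payload_len + (size_t)i] = (uint8_t)(crc >> (8 * i));
}

/* Target side: recompute the CRC at start-up and compare with the stored one. */
int image_is_valid(const uint8_t *image, size_t total_len)
{
    if (image == NULL || total_len < 4)
        return 0;
    size_t payload_len = total_len - 4;
    uint32_t stored = 0;
    for (int i = 0; i < 4; i++)
        stored |= (uint32_t)image[payload_len + (size_t)i] << (8 * i);
    return crc32_compute(image, payload_len) == stored;
}
```

A build script would call `image_append_crc` once on the host, and the boot code would call `image_is_valid` before jumping to the program. A table-driven CRC is usually faster; the bitwise form is shown only because it is short.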

Embedded debugging may be performed at different levels, depending on the facilities available. From simplest to most sophisticated, they can be roughly grouped into the following areas:

- Interactive resident debugging, using the simple shell provided by the embedded operating system (e.g. Forth and Basic).
- External debugging using logging or serial port output to trace operation, using either a monitor in flash or a debug server like the Remedy Debugger, which even works for heterogeneous multicore systems.
- An in-circuit debugger (ICD), a hardware device that connects to the microprocessor via a JTAG or NEXUS interface. This allows the operation of the microprocessor to be controlled externally, but is typically restricted to specific debugging capabilities in the processor.
- An in-circuit emulator, which replaces the microprocessor with a simulated equivalent, providing full control over all aspects of the microprocessor.
- A complete emulator, which provides a simulation of all aspects of the hardware, allowing all of it to be controlled and modified, and allowing debugging on a normal PC.

Unless restricted to external debugging, the programmer can typically load and run software through the tools, view the code running in the processor, and start or stop its operation. The view of the code may be as assembly code or source code.

Embedded systems often reside in machines that are expected to run continuously for years

without errors, and in some cases recover by themselves if an error occurs. Therefore the

software is usually developed and tested more carefully than that for personal computers, and

unreliable mechanical moving parts such as disk drives, switches or buttons are

avoided. Recovery from errors may be achieved with techniques such as a watchdog timer that

resets the computer unless the software periodically notifies the watchdog.

Specific reliability issues may include:

- The system cannot safely be shut down for repair, or it is too inaccessible to repair. Solutions may involve subsystems with redundant spares that can be switched over to, or software "limp modes" that provide partial function. Examples include space systems, undersea cables, navigational beacons, bore-hole systems, and automobiles.
- The system must be kept running for safety reasons. "Limp modes" are less tolerable. Often backups are selected by an operator. Examples include aircraft navigation, reactor control systems, safety-critical chemical factory controls, train signals, and engines on single-engine aircraft.
- The system will lose large amounts of money when shut down. Examples include telephone switches, factory controls, bridge and elevator controls, funds transfer and market making, and automated sales and service.

A real-time operating system (RTOS) is a multitasking operating system intended for real-time

applications. Such applications include embedded systems (programmable thermostats,

household appliance controllers, mobile telephones), industrial robots, spacecraft, industrial

control (see SCADA), and scientific research equipment.

An RTOS facilitates the creation of a real-time system, but does not guarantee the final result will

be real-time; this requires correct development of the software. An RTOS does not necessarily

have high throughput; rather, an RTOS provides facilities which, if used properly, guarantee

deadlines can be met generally (soft real-time) or deterministically (hard real-time). An RTOS will

typically use specialized scheduling algorithms in order to provide the real-time developer with the

tools necessary to produce deterministic behavior in the final system. An RTOS is valued more for

how quickly and/or predictably it can respond to a particular event than for the given amount of

work it can perform over time. Key factors in an RTOS are therefore a minimal interrupt latency

and a minimal thread switching latency. An early example of a large-scale real-time operating

system was the so-called "control program" developed by American Airlines and IBM for the

Sabre Airline Reservations System.

Time-sharing designs switch tasks more often than is strictly needed, but give smoother, more

deterministic multitasking, giving the illusion that a process or user has sole use of a machine.

Early CPU designs needed many cycles to switch tasks, during which the CPU could do nothing

useful. So early OSes tried to minimize wasting CPU time by maximally avoiding unnecessary

task-switches. More recent CPUs take far less time to switch from one task to another; the extreme

case is barrel processors that switch from one task to the next in zero cycles. Newer RTOSes

almost invariably implement time-sharing scheduling with priority driven pre-emptive scheduling.

In typical designs, a task has three states: 1) running, 2) ready, 3) blocked. Most tasks are

blocked, most of the time. Only one task per CPU is running. In simpler systems, the ready list is

usually short, two or three tasks at most. The real key is designing the scheduler. Usually the data

structure of the ready list in the scheduler is designed to minimize the worst-case length of time

spent in the scheduler's critical section, during which preemption is inhibited, and, in some cases,

all interrupts are disabled. But the choice of data structure also depends on the maximum

number of tasks that can be on the ready list.
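
The three task states and a short ready list can be sketched as follows. This is a simplified model, not any particular RTOS: the names `task_t`, `ready_list_add`, and `ready_list_dispatch` are hypothetical, a larger `priority` value is assumed to mean a more urgent task, and no locking or interrupt handling is shown.

```c
#include <assert.h>
#include <stddef.h>

typedef enum { TASK_RUNNING, TASK_READY, TASK_BLOCKED } task_state_t;

typedef struct task {
    const char *name;
    int priority;              /* assumption: larger value = more urgent */
    task_state_t state;
    struct task *prev, *next;  /* links in the unsorted ready list */
} task_t;

typedef struct { task_t *head; } ready_list_t;

/* Mark a task ready and push it on the front of the list: O(1). */
void ready_list_add(ready_list_t *rl, task_t *t)
{
    assert(rl != NULL && t != NULL);
    t->state = TASK_READY;
    t->prev = NULL;
    t->next = rl->head;
    if (rl->head != NULL)
        rl->head->prev = t;
    rl->head = t;
}

/* Unlink a task that is currently on the list. */
static void ready_list_remove(ready_list_t *rl, task_t *t)
{
    if (t->prev != NULL) t->prev->next = t->next; else rl->head = t->next;
    if (t->next != NULL) t->next->prev = t->prev;
    t->prev = t->next = NULL;
}

/* Scan the whole list for the highest priority, unlink that task,
 * and mark it running. Returns NULL when no task is ready. */
task_t *ready_list_dispatch(ready_list_t *rl)
{
    task_t *best = rl->head;
    for (task_t *t = rl->head; t != NULL; t = t->next)
        if (t->priority > best->priority)
            best = t;
    if (best != NULL) {
        ready_list_remove(rl, best);
        best->state = TASK_RUNNING;
    }
    return best;
}
```

With only two or three ready tasks, the full scan in `ready_list_dispatch` is short, which is why an unsorted list can be adequate for simple systems.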

If there are never more than a few tasks on the ready list, then a simple unsorted bidirectional

linked list of ready tasks is likely optimal. If the ready list usually contains only a few tasks but

occasionally contains more, then the list should be sorted by priority, so that finding the highest

priority task to run does not require iterating through the entire list. Inserting a task then requires

walking the ready list until reaching either the end of the list, or a task of lower priority than that of

the task being inserted. Care must be taken not to inhibit preemption during this entire search; the

otherwise-long critical section should probably be divided into small pieces, so that if, during the

insertion of a low priority task, an interrupt occurs that makes a high priority task ready, that high

priority task can be inserted and run immediately (before the low priority task is inserted).
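
The priority-sorted insertion described above might look like the following sketch. The names `node_t`, `sorted_insert`, and `sorted_pop_highest` are hypothetical, and a larger `priority` value is assumed to be more urgent. For brevity the sketch performs the whole walk in one step; it does not implement the advice about splitting the critical section into small preemptible pieces.

```c
#include <assert.h>
#include <stddef.h>

typedef struct node {
    int priority;               /* assumption: larger value = more urgent */
    struct node *prev, *next;
} node_t;

typedef struct { node_t *head, *tail; } sorted_ready_list_t;

/* Insert in descending priority order. A task with the same priority as
 * existing entries goes after them, so equal priorities run in FIFO order. */
void sorted_insert(sorted_ready_list_t *rl, node_t *n)
{
    assert(rl != NULL && n != NULL);
    node_t *pos = rl->head;
    /* Walk until the end of the list or the first lower-priority task. */
    while (pos != NULL && pos->priority >= n->priority)
        pos = pos->next;

    /* Link n in front of pos, or at the tail when pos is NULL. */
    n->next = pos;
    n->prev = (pos != NULL) ? pos->prev : rl->tail;
    if (n->prev != NULL) n->prev->next = n; else rl->head = n;
    if (pos != NULL) pos->prev = n; else rl->tail = n;
}

/* The highest-priority task is always at the head: O(1) removal. */
node_t *sorted_pop_highest(sorted_ready_list_t *rl)
{
    node_t *n = rl->head;
    if (n == NULL)
        return NULL;
    rl->head = n->next;
    if (rl->head != NULL) rl->head->prev = NULL; else rl->tail = NULL;
    n->prev = n->next = NULL;
    return n;
}
```

The trade-off is visible in the two functions: insertion costs a walk proportional to the list length, while selecting the next task to run no longer needs to iterate at all.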

The critical response time, sometimes called the flyback time, is the time it takes to queue a new

ready task and restore the state of the highest priority task. In a well-designed RTOS, readying a

new task will take 3-20 instructions per ready queue entry, and restoration of the highest-priority

ready task will take 5-30 instructions. On a 20 MHz 68000 processor, task switch times run about

20 microseconds with two tasks ready. 100 MHz ARM CPUs switch in a few microseconds. In more

advanced real-time systems, real-time tasks share computing resources with many non-real-time

tasks, and the ready list can be arbitrarily long. In such systems, a scheduler ready list

implemented as a linked list would be inadequate.

Multitasking systems must manage sharing data and hardware resources among multiple tasks. It

is usually "unsafe" for two tasks to access the same specific data or hardware resource

simultaneously. ("Unsafe" means the results are inconsistent or unpredictable, particularly when

one task is in the midst of changing a data collection. The view by another task is best done either

before any change begins, or after changes are completely finished.) There are three common

approaches to resolve this problem: temporarily masking interrupts, semaphores, and message passing.

General-purpose operating systems usually do not allow user programs to mask (disable)

interrupts, because the user program could control the CPU for as long as it wished. Modern CPUs

make the interrupt disable control bit (or instruction) inaccessible in user mode to allow operating

systems to prevent user tasks from doing this. Many embedded systems and RTOSs, however,

allow the application itself to run in kernel mode for greater system call efficiency and also to

permit the application to have greater control of the operating environment without requiring OS

intervention.

On single-processor systems, if the application runs in kernel mode and can mask interrupts,

often that is the best (lowest overhead) solution to preventing simultaneous access to a shared

resource. While interrupts are masked, the current task has exclusive use of the CPU; no other

task or interrupt can take control, so the critical section is effectively protected. When the task

exits its critical section, it must unmask interrupts; pending interrupts, if any, will then execute.

Temporarily masking interrupts should only be done when the longest path through the critical

section is shorter than the desired maximum interrupt latency, or else this method will increase

the system's maximum interrupt latency. Typically this method of protection is used only when the

critical section is just a few source code lines long and contains no loops. This method is ideal for

protecting hardware bitmapped registers when the bits are controlled by different tasks.
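
A short critical section protected by interrupt masking can be sketched as below. Everything hardware-related is a host-side stand-in so the example runs on a PC: `irq_enabled` imitates the CPU's interrupt-enable bit, `port_reg` imitates a memory-mapped output register, and `irq_save_and_disable` and `irq_restore` are hypothetical names for what would be a few processor-specific instructions on a real target.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Host-side stand-ins (assumptions, not a real hardware API). */
bool irq_enabled = true;        /* imitates the CPU interrupt-enable bit */
volatile uint8_t port_reg = 0;  /* imitates a bitmapped output register */

/* Disable interrupts and report whether they were enabled before. */
bool irq_save_and_disable(void)
{
    bool was_enabled = irq_enabled;
    irq_enabled = false;
    return was_enabled;
}

/* Restore the previous state, so nested critical sections stay masked. */
void irq_restore(bool was_enabled)
{
    irq_enabled = was_enabled;
}

/* Set bits owned by one task without disturbing bits owned by others.
 * The read-modify-write is the entire critical section: no loops. */
void port_set_bits(uint8_t mask)
{
    bool saved = irq_save_and_disable();
    port_reg |= mask;
    irq_restore(saved);
}

void port_clear_bits(uint8_t mask)
{
    bool saved = irq_save_and_disable();
    port_reg &= (uint8_t)~mask;
    irq_restore(saved);
}
```

Saving and restoring the previous state, rather than unconditionally re-enabling interrupts, is a design choice that keeps the helpers safe to call from code that has already masked interrupts.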

When the critical section is longer than a few source code lines or involves lengthy looping, an

embedded/real-time programmer must resort to using mechanisms identical or similar to those

available on general-purpose operating systems, such as semaphores and OS-supervised

interprocess messaging. Such mechanisms involve system calls, and usually invoke the OS's

dispatcher code on exit, so they can take many hundreds of CPU instructions to execute, while

masking interrupts may take as few as three instructions on some processors. But for longer

critical sections, there may be no choice; interrupts cannot be masked for long periods without

increasing the system's interrupt latency.